SP REST API Cookbook

SP v8.4

API v4

Table of Contents

- 1. Introduction

- 2. Set-up

- 3. Reports

- 3.1. Example: Time between an Alert and a Mitigation

- 3.2. Example: The Collector with the most system alerts

- 3.3. Example: Plotting Alert Data

- 3.4. Example: Using Alert Details to create radar plots of alert characteristics

- 3.5. Example: Attacks Grouped by CIDR Block

- 3.6. Example: Differences in output between accounts with different authorization

- 3.7. Using the SP REST API to write a client that supports caching (or Why the Sub-Object JSON is so Deeply Nested)

- 4. Configuration

- 5. Operations

- 6. Insight

- 7. Examples in Languages other than Python

- 8. Appendices

1 Introduction

Arbor Networks SP is a very sophisticated piece of software that gives visibility into the network traffic on networks ranging up to those that are massively large; SP support hundreds of configuration parameters for defining what exactly visibility means and the reported data from SP is very rich and very broad. Almost no network operators who use SP make use of the entire range of its capabilities.

The configuration of SP and the management of its data is done in two ways: the SP web-based UI and the web-based API interfaces to SP. While SP has had APIs for many versions, the versions starting with 8.1 have had a REST API as one of the options for configuration and data retrieval.

This book describes how to programmatically configure SP and get data about your network from SP using a json:api-based REST API to SP.

REST is a common, HTTP-based protocol that defines web-based services that allow clients (sometimes called “requesting systems”) to access and manipulate textual representations (in the case of SP, JSON representations) of SP configuration and reporting data (generically called “Web resources”) using a uniform and predefined set of stateless, from the client’s perspective, operations. All of the state, configuration and data, is maintained within SP, not by the API client. The API described here is the SP REST API. SP has other APIs (SOAP and WebServices) that are documented in other SP documentation. The intent of the SP REST API is to, over time, include all of the functionality of the SOAP and WebServices API, at which point those will be deprecated.

The SP REST API uses a particular API-focused JavaScript Object Notation (JSON) format to represent its data. JSON is a textual representation of data in collections (Python calls these dictionaries, C calls them structures, Go calls them maps, Ruby and Perl call them hashes) and lists (Python and Perl also calls them lists, Go calls them slices, C and Ruby call them arrays). The elements in collections and lists are other collections, other lists, Boolean values, numeric values, or string values as described by the JSON specification (http://www.json.org/). The particular JSON representation that the SP REST API adheres to is called json:api (http://jsonapi.org/); the rules and guidelines described in Version 1.0 of the json:api specification are implemented for the SP REST API.

The SP REST API is under development. Each release of Arbor Networks SP after version 8.0 includes a REST API that has more features than the prior release. Arbor Networks expects that the REST API will not be complete for several years, but incremental steps will be included in each version of SP. This book describes Version 3 of the API that is included in SP 8.3. (The versioning mechanism is described in more detail in the section 1.3.)

This book is intended for the SP administrator or advanced user who has some programming or shell scripting experience and who wants to automate network management or reporting tasks, or combine SP data with other sources of data for reporting, configuration, or decision making.

1.1 Conventions Used in this Book

Things in a fixed-width font are either input or output, for the most part.

See the section 8.4 for advice on how to provide feedback.

This is a collaborative document, feedback, additions, edits, changes, modifications, etc. are all greatly appreciated. Please see the section 8.6 for advice on ways to contribute.

The examples that were written for a version of SP and its REST API

will report their version in both their meta object and in the

URLs; those examples are not generally reproduced for the latest

version, but also should continue to work even on the latest

version (as well as on the prior version of the API in newer

versions of SP. Put another way, if you see an example that uses a

URL with a specific /v[VersionNumber]/ in it or example output

that includes a meta object like

"meta": {

"api": "SP",

"api_version": "3",

"sp_build_id": "HGRD",

"sp_version": "8.3"

}

you can, in probably all cases, use the latest version of SP and the REST API safely. Alternatively, you can use the latest version of SP and the specified version of the REST API.1

1.2 SP APIs

Arbor Networks SP has three officially supported types of APIs: the SOAP API, the WebServices API, and the REST API.

The SOAP and WebServices API are documented in the online SP documentation and in the API software development kit available from the SP web UI at the Administration > Download Arbor API SDK menu item.

Arbor Networks has decided to focus on the REST API (the topic of this book) for its future development with the goal of encompassing the functionality of the other APIs, the SP command-line interface, and all of the functionality provided by the web-based UI that is the most familiar to SP users.

As you develop clients for SP’s APIs, keep in mind that the REST API is the officially preferred API for SP. However, in this version (and, likely, several upcoming versions), the REST API does not provide all of the functionality of the SOAP and WebServices APIs. Arbor recommends using the REST API where you can in your clients, and augmenting it with API requests to the other APIs, replacing those requests as the REST API gains functionality.

1.3 Guiding Principles of the SP REST API

As Arbor Networks develops the REST API, we abide by some standards and principles that you can rely on as you you create API clients.

1.3.1 JSON API

The first of these guidelines is that the structure of the JSON that is used for input and output from the API follows the JSON API specification (http://jsonapi.org/). While you don’t need to understand the specification to use the SP REST API, it may help answer some questions about why things are the way they are in the API, and how Arbor is likely to handle future additions to the API.

1.3.2 Useful Errors for Humans and Computers

The second guideline is that the SP REST API should provide you with useful error messages that can be interpreted by both a human and a computer program. Arbor makes use of a combination of HTTP response codes as described in Section 10 of RFC 2616 (https://www.ietf.org/rfc/rfc2616.txt) and error objects that contain some or all of an error title string, an error detail string, an HTTP status code string, and a pointer to the error in the input. An example error object that resulted from the REST client requesting a nonexistent endpoint is:

{

"detail": "Resource https://leader.example.com/api/sp/asdf could not be found.",

"meta": {

"api": "SP",

"api_version": "2",

"sp_build_id": "HGRD",

"sp_version": "8.3"

},

"status": "404",

"title": "Missing resource error."

}

This error object contains the error detail, status code, and

title along with the standard "meta" section which gives

information about the API itself. Error objects are further

described in the JSON API specification in the Errors section

(http://jsonapi.org/format/#errors).

1.3.3 Backward compatibility

Another guideline is that the REST API will maintain backward-compatibility as it increases in version. The version for the API is a combination of the SP version and the API version number; in the example above the API version would be reported as “SP v8.3, API v2”. It is possible that there will be an API v2 for SP v8.2.3 that is different from the API v2 for SP v8.3. Arbor Networks will retain at least one prior version of the API in each version of SP so if you have written clients that use v3 of the API in SP v8.4 and upgrade to SP v8.5 that defaults API v4, you can still access API v3 in SP v8.5 with your clients while you work to migrate them to the latest version. API version increases will happen when Arbor Networks introduces a change in the API that could potentially break existing clients; this includes things like changing the name of an API key, changing the type of an API value, or removing an API endpoint. Adding keys and endpoints is not considered breaking, as well-written API clients should ignore extra data returned to them.

1.3.4 Discoverability

Arbor Networks makes every effort to ensure the SP REST API is

fully discoverable from the root URL, /api/sp/. Starting there,

every response you receive may contain an object called

relationships that will lead you to the related objects. There

are no detached nodes of the API tree. This is called Hypermedia

as the Engine of Application State (HATEOAS) and is defined2

by Roy T. Fielding (the inventor of REST) as:

A REST API should be entered with no prior knowledge beyond the initial URI (bookmark) and set of standardized media types that are appropriate for the intended audience (i.e., expected to be understood by any client that might use the API). From that point on, all application state transitions must be driven by client selection of server-provided choices that are present in the received representations or implied by the user’s manipulation of those representations. The transitions may be determined (or limited by) the client’s knowledge of media types and resource communication mechanisms, both of which may be improved on-the-fly (e.g., code-on-demand).

In the case of the SP REST API, the “initial URI” is

https://sp-leader.example.com/api/sp/ and following that

the elements in the links objects provide connectivity to the

rest of the API.

1.4 Online Reference Manual

The SP REST API includes a reference manual for each endpoint with a description of each key in that endpoint; there are very few examples of using the API in the reference manual (hence this book), but all of the endpoints and their properties are listed for all of the versions of the API supported on the installed version of SP.

In addition, changes between versions are in the Deprecation Policy section of the reference manual.

This book will often make reference to the online reference manual; having access to that manual will be helpful as you develop clients. The online reference manual is available from the Arbor SP UI by selecting the “REST API Documentation” from the “Administration” menu. A PDF copy of this is available from your local engineer or from the Arbor Technical Assistance Center.

2 Set-up

2.1 Permissions, Access Tokens, and SSL Certificates

The current version of the SP REST API does not support

authorization. That is, granting someone an API access token is

the same as granting them “admin” access to the SP deployment.

2.1.1 Access Tokens

SP REST API tokens are created from the SP command line interface with the command:

/ services aaa local apitoken generate admin "comment"

where admin is the username of the administrative user and

"comment" is a useful comment for you. Arbor recommends the

local name of the user and the date the token is created, for

example:

/ services aaa local apitoken generate admin "fred@example.com 2017-07-01"

You can list the tokens that have been created with the command:

/ services aaa local apitoken show

which will result in a list of API tokens and their associated comments, something like this:

admin: wz8SmDeJRSkz_0kNbdSajSQ_Jk82EVOqRU6CPU_O susy@example.com - 2017-06-01 YK3va5ATpXdmTWwfgzZPBckrj7zue205CzjLBtK5 fred@example.com - 2017-07-01

To remove the token YK3va5ATpXdmTWwfgzZPBckrj7zue205CzjLBtK5 type

the command:

/ services aaa local apitoken remove YK3va5ATpXdmTWwfgzZPBckrj7zue205CzjLBtK5

2.1.2 SSL Certificates

All SP REST API requests should use the secure HTTP transport

(https). This will ensure that your API key and the contents of

requests and responses are encrypted. In addition, SP will

attempt to redirect unencrypted HTTP requests to the encrypted

port, but that can affect the use of the POST and PATCH options.

SP comes with its own SSL certificate, and your organization may

also add their own; to verify that your communications are secure,

you will need a copy of that certificate. The person who

maintains your SP deployment should be able to provide that to

you; if you have shell access to the SP leader, the file

containing the certificates is at /etc/ca-bundle.crt. Copying

that file to your API client’s environment will allow you to refer

to it. In the examples in this book that file is referred to at

certfile in most places.

2.2 Useful Tools

When working with REST APIs there are several common actions that you will take: getting data from the API, processing retrieved data, and sending data to the API. There are many free programs that can help you do this in an interactive way. This can be useful during development of more complicated programs, for quick API queries that don’t require a whole program, for use in a shell script, or for interactively working the SP REST API.

Examples later in this book will make use of at least some of these tools.

2.2.1 Any web browser

The SP API is available via any web browser by simply going to a

URL of the form https//spleader.example.com/api/sp/; this

will present a nicely formatted web page showing the request and

the results. It’s not as convenient as other tools (cURL or

HTTPie, see the following section) for actions other than GET, but

as a quick test of an API request, it can be very handy. An

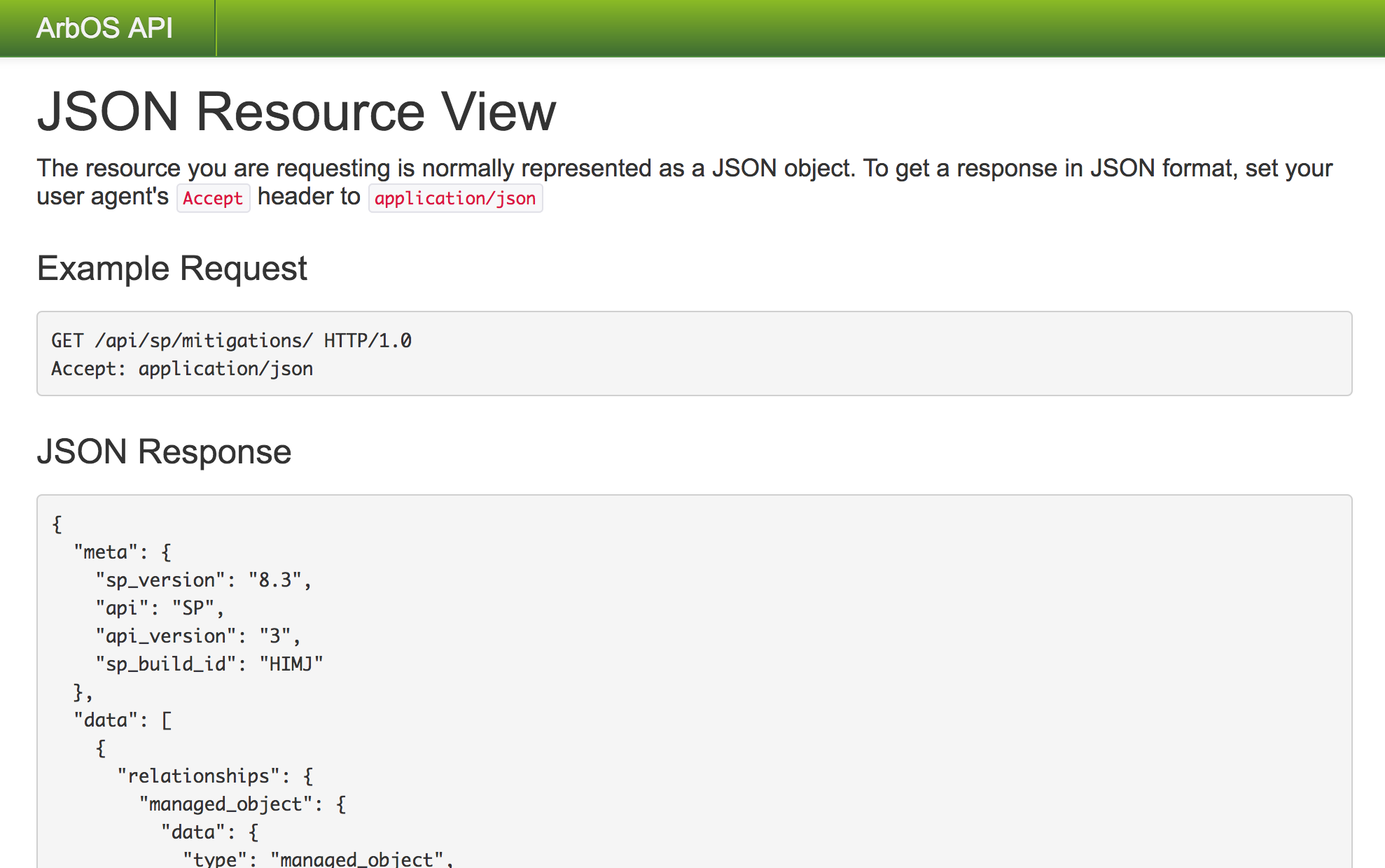

example of how it looks is in Figure 1.

Figure 1: The SP REST API can be accessed using a web browser and the query and results are displayed nicely.

2.2.2 cURL

cURL is a classic program (dating back to 1997) for accessing HTTP services from the command line. It is open source and available for free from the main cURL website http://curl.haxx.se or via package managers for your platform. cURL is available for 34 different operating systems, so should work in almost all scenarios. There are more modern and feature-rich tools for accessing REST APIs (or other HTTP services), but cURL is nearly ubiquitous.

- Getting data with cURL

An example of using cURL to get data from SP via the SP REST API is:

curl --cacert certfile --ssl -X GET -H "Content-Type:application/vnd.api+json" -H "X-Arbux-APIToken:wz8SmD3JRSKz_0kNbdSejSQ_Jk92EVOqRU6CPU_O" https://leader.example.com/api/sp/

In order of appearance, the options used in the

curlcommand above are:--cacert certfiletellscurlto use the SSL certificate calledcertfile(more on this later)--ssltellscurlto use Secure Sockets Layer (SSL) for its connection-X GETtellscurlto issue an HTTPGETcommand (more on this later, too)-H "Content-Type:application/vnd.api+json"sends the HTTP headerContent-Typeset to the value ofapplication/vnd.api+json. Other than the endpoints at/insight/, all of the SP API uses this content type-H "X-Arbux-APIToken:wz8SmD3JRSKz_0kNbdSejSQ_Jk92EVOqRU6CPU_O"sends the HTTP headerX-Arbux-APITokenset to the value of the API key that was set via the SP command linehttps://leader.example.com/api/sp/which is the URL (hostname and endpoint) that is requested. In this case, it is the index endpoint for the API.

The output of this particular command is the index of the SP REST API represented as a JSON object (defined by the outer-most curly braces) with two JSON objects in it,

metaandlinks.Successful requests for data from the SP REST API will return the requested data along with the HTTP status code

200 OK. - Sending data with cURL

An example of using cURL to send data to SP via the SP REST API is:

curl --cacert certfile --ssl -X POST -H "Content-Type:application/vnd.api+json" -H "X-Arbux-APIToken:wz8SmD3JRSKz_0kNbdSejSQ_Jk92EVOqRU6CPU_O" -d @input.json https://leader.example.com/api/sp/alerts/12519/annotations/

In order of appearance, the options used in the

curlcommand above are:--cacert certfiletellscurlto use the SSL certificate calledcertfile(more on this later)--ssltellscurlto use Secure Sockets Layer (SSL) for its connection-X POSTtellscurlto issue an HTTPPOSTcommand (more on this later, too)-H "Content-Type:application/vnd.api+json"sends the HTTP headerContent-Typeset to the value ofapplication/vnd.api+json. Other than the endpoints at/insight/, all of the SP API uses this content type-H "X-Arbux-APIToken:wz8SmD3JRSKz_0kNbdSejSQ_Jk92EVOqRU6CPU_O"sends the HTTP headerX-Arbux-APITokenset to the value of the API key that was set via the SP command line-d @input.jsontells cURL that the data to be sent can be found in the file namedinput.jsonhttps://leader.example.com/api/sp/alerts/12519/annotations/which is the URL (hostname and endpoint) to which the data will be sent. In this case, it is an alert annotation for the alert with id 12519.

Although this will be discussed in more depth later in this book, the format of the data for an alert annotation (in this example, the contents of the file

input.json) is:{ "data": { "attributes": { "author": "The name of the author", "text": "The text of the annotation" } } }Using the HTTP verb

PATCHto change data is very similar to how thePOSTverb is used.Successful API

POSTandPATCHrequests will return all of the data for the object that was created or modified along with either a200 OKor201 Createdstatus code. - Deleting data with cURL

An example of using cURL to delete data from SP via the SP REST API is:

curl --cacert certfile --ssl -X DELETE -H "Content-Type:application/vnd.api+json" -H "X-Arbux-APIToken:wz8SmD3JRSKz_0kNbdSejSQ_Jk92EVOqRU6CPU_O" https://leader.example.com/api/sp/routers/132

In order of appearance, the options used in the

curlcommand above are:--cacert certfiletellscurlto use the SSL certificate calledcertfile(more on this later)--ssltellscurlto use Secure Sockets Layer (SSL) for its connection-X DELETEtellscurlto issue an HTTPDELETEcommand (more on this later, too)-H "Content-Type:application/vnd.api+json"sends the HTTP headerContent-Typeset to the value ofapplication/vnd.api+json. Other than the endpoints at/insight/, all of the SP API uses this content type-H "X-Arbux-APIToken:wz8SmD3JRSKz_0kNbdSejSQ_Jk92EVOqRU6CPU_O"sends the HTTP headerX-Arbux-APITokenset to the value of the API key that was set via the SP command linehttps://leader.example.com/api/sp/routers/132which is theURL (hostname and endpoint) that identifies what resource in SP will be deleted. In this case, it is the router with id 132.

API delete requests do not return any data, since the SP object you were acting on is now gone. The HTTP status code that will be returned for successfully deletions is

204 No Content.

2.2.3 HTTPie

HTTPie is a Python program that is much newer than cURL (although has still seen a lot of development; the initial commit to Github was on 25 February 2012) and has some conveniences that cURL doesn’t. The biggest convenience, in my opinion, is the ability to save configuration data for a host, and select which host configuration to use when the the command is run.

HTTPie is open source and available for free from https://httpie.org/ or via package managers for your platform. HTTPie is based on Python, so is available for any platform that supports Python. There are installation instructions for MacOS, Linux, and Windows at https://httpie.org/doc#installation.

Among the Arbor Networks engineers, HTTPie is widely used.

- Configuring HTTPie

There is much more information on the configuration of HTTPie in its online documentation, but the two things that might be useful are setting the defaults and creating some sessions.

HTTPie’s configuration is kept in a simple JSON-formatted file called

config.json(see https://httpie.org/doc#config-file-location for more); a good start for configuration is:{ "__meta__": { "about": "HTTPie configuration file", "help": "https://github.com/jkbrzt/httpie#config", "httpie": "0.9.4" }, "default_options": [ "--verify=/path/to/a/ssl/certfile", "--session=spleader.my.example.com", "--timeout=60", "--follow" ] }The

"__meta__"section comes with HTTPie, and the default options set:- the path to a SSL certificate file bundle

- the default connection information (what HTTPie calls a session)

- a useful timeout value of 60 seconds

- the option to follow HTTP redirects

Once you have created

config.jsonyou can add some information to the saved session by typing all of the information once on the command line. The information the SP REST API needs is theContent-TypeandX-Arbux-APITokenheaders; this is entered by typing:http https://spleader.example.com/api/sp X-Arbux-APIToken:wz8SmD3JRSKz_0kNbdSejSQ_Jk92EVOqRU6CPU_O Content-Type:application/vnd.api+json

(replacing, of course, the hostname and API token in that example with your own hostname and API token). After you do that once, you can then type:

http https://spleader.my.comany.com/api/sp

and the configuration will read the data from the saved file. Save session information is in the

.httpie/sessions/directory and are JSON files in directories named after the host. - Getting data with HTTPie

Replicating the cURL example from earlier, getting the SP REST API index using HTTPie, after following the configuration steps, is done using the command:

http GET https://spleader.example.com/api/sp/

When HTTPie detects that is is writing to the terminal, it will include additional information that might look about like:

HTTP/1.1 200 OK

Connection: Keep-Alive

Content-Length: 1593

Content-Security-Policy: default-src ’self’ ’unsafe-inline’ ’unsafe-eval’

Content-Type: application/vnd.api+json

Date: Tue, 01 Aug 2017 00:55:31 GMT

Keep-Alive: timeout=15, max=10000

P3P: policyref=“/w3c/p3p.xml”, CP=“NOI DSP COR TAIa OUR NOR UNI”

Server: Apache

Strict-Transport-Security: max-age=1261440000

Via: 1.1 127.0.0.1

X-Frame-Options: SAMEORIGIN

when HTTPie thinks it is directing its output somewhere else, it will not print this information; if you want to override HTTPie’s decisions about this, there are command-line options to do so.

- Sending data with HTTPie

An example of using HTTPie to send the same data to SP we sent via the SP REST API is using cURL earlier is (see

input.jsonin the cURL example of sending data to SP):cat input.json | http POST http https://spleader.example.com/api/sp/alerts/12519/annotations/

The two differences between getting and sending data to SP via the REST API using HTTPie are:

- using the

POSTverb instead of theGETverb before the URL - the data you want to send to SP via the REST API is in the

same format as in the cURL example, but it is piped into the

httpcommand

To make changes to SP via the REST API, you can use the

PATCHverb to HTTPie instead ofPOST. There will be more examples of this later. - using the

- Deleting data with HTTPie

Repeating the example of deleting data from the cURL section, the syntax for using HTTPie to delete data from SP via the SP REST API is:

http DELETE https://spleader.example.com/api/sp/routers/132

The SP REST API will return the HTTP status code

204 No Contentand HTTPie will print this if you aren’t piping or redirecting the output somewhere else.

2.2.4 Postman

Postman is an application that provides a graphical user interface for working with REST APIs. It is free to use the basic version, with the versions more suitable for development enterprises costing money. Postman is available from https://www.getpostman.com/ for MacOS, Linux, and Windows. It is also available in the Chrome App Store as a plug-in for the Google Chrome web browser3.

Among the Arbor Networks engineers, Postman is widely used.

- Configuring Postman

If you are installing the Postman client there are three configuration settings you need to make before using it with the SP REST API; adding the certificate bundle and adding the

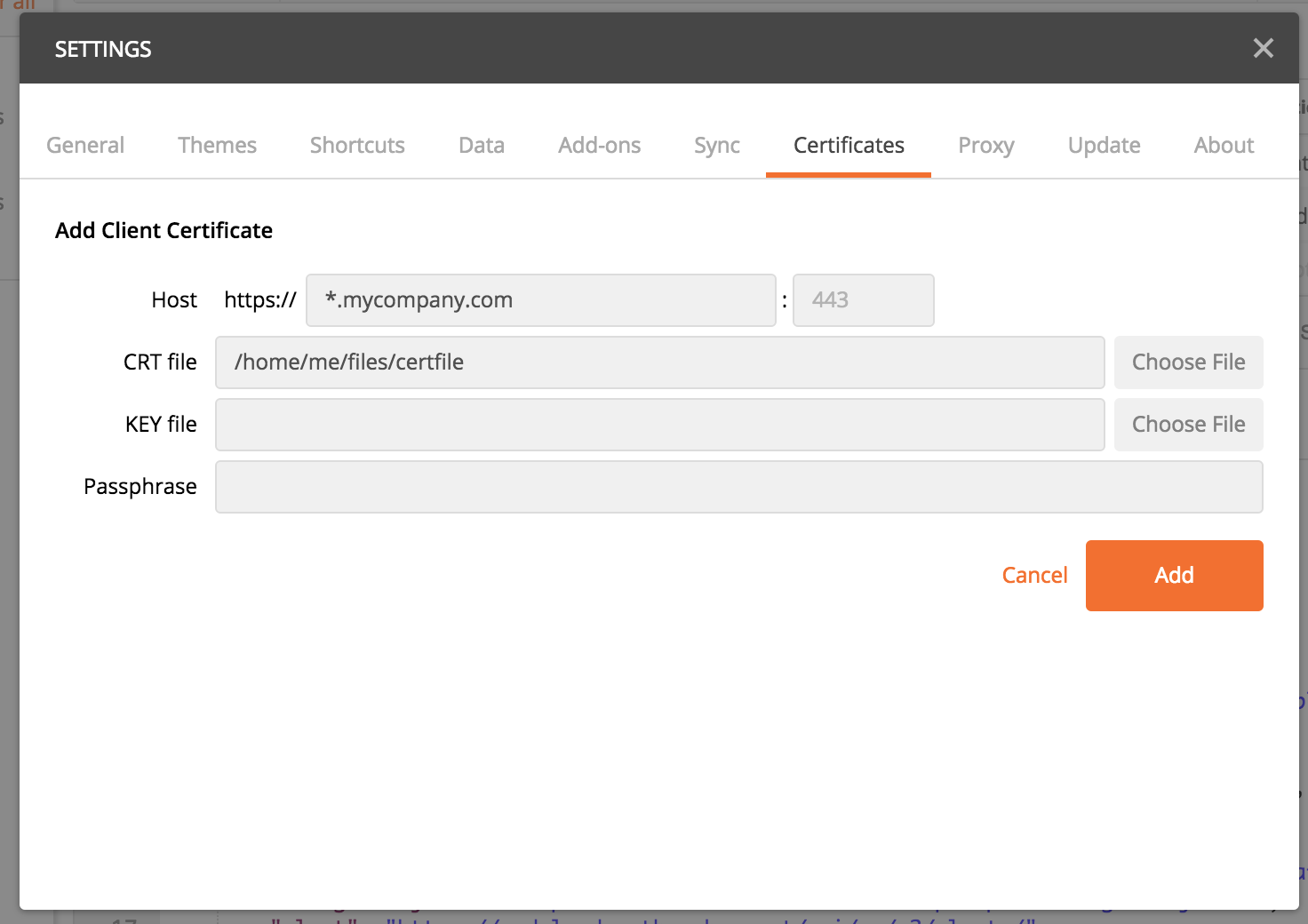

Content-TypeandX-Arbux-APITokenheaders. If you are using the Postman Chrome app, you don’t need to add the certificates; Postman will use those in your browser, but you may still have to add them to your browser.To add the SP certificate bundle to the Postman application, select the wrench icon in the upper right part of the Postman window and choose Settings from the menu. In the resulting window, choose the Certificates option from the top row of options, then click Add Certificate. In the Host text entry field, type

*.mydomain.com(replacingmydomain.comwith the domain where your SP leader is), and in the CRT File text entry field type the path to the certificate bundle for your SP environment. It should look something like what is shown in Figure 2.

Figure 2: The Postman certificate configuration window should look similar to this, with your information filled in; if you are using the Chrome app, you won’t see the Certificates option in the top row; Postman will use your browser’s certificates.

For both the application and Chrome app you will need to set the

Content-TypeandX-Arbux-APITokenheaders. This is done in the Headers section that is immediately below the URL entry field (after making a request, there will be another Headers section for the response). You simply type in each key and the values for them. For all of the SP REST API endpoints except those under/insight/you must setContent-Typetoapplication/vnd+api.json; for the endpoints under/insight/you must setContent-Typetoapplication/json. - Getting data with Postman

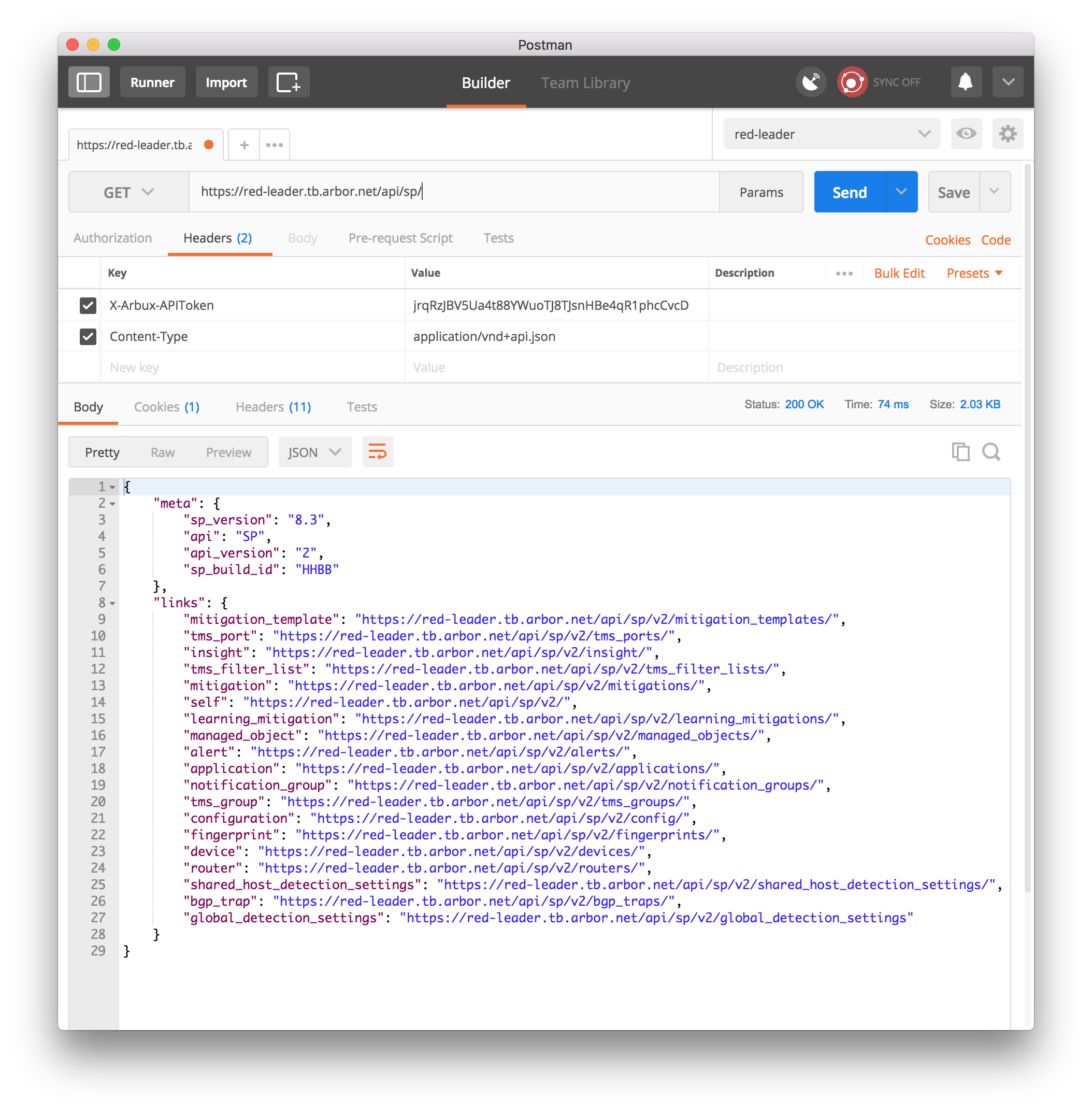

Following the cURL and HTTPie examples, to get the SP REST API index using Postman you make sure that

GETis selected to the left of the text entry box that says “Enter request URL”, then enter the URL for the index endpoint (https://spleader.example.com/api/sp/) and press the Send button. The results (the body, cookies, and headers) will appear in the bottom pane. It will look something like what is shown in Figure 3.

Figure 3: Retrieving data from the SP REST API using Postman is done by setting the two headers shown, entering a URL, and pressing the Send button. The data retrieved is displayed in the lower pane.

- Sending data with Postman

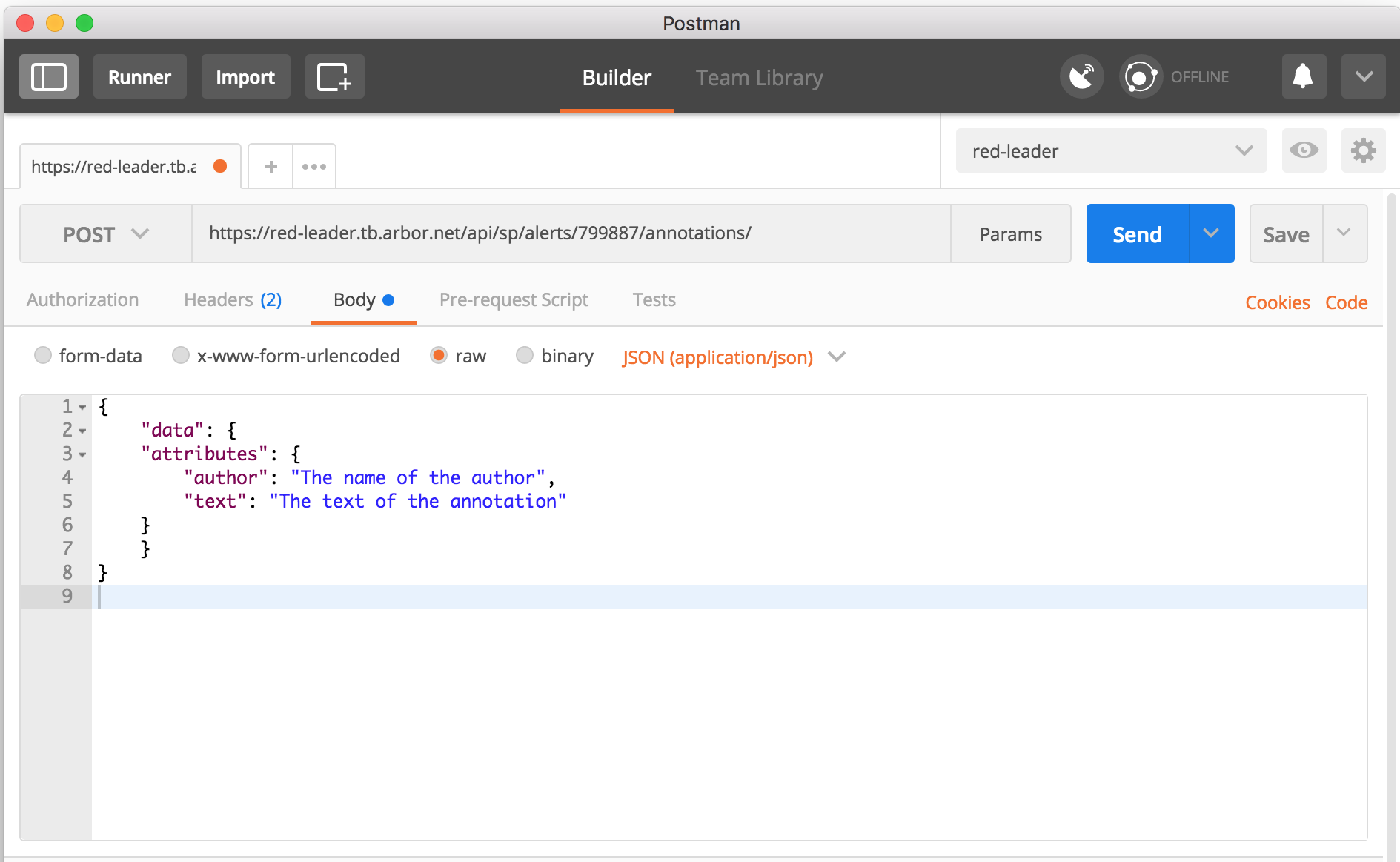

Once again using the same

input.jsonfile from earlier, we will set an annotation on an alert, this time using Postman.The steps in Postman to get ready to send date are:

- change the HTTP verb to the left of the URL from

GETtoPOST - enter the URL to which you are sending the data in the text entry box that says “Enter request URL”

- select Body from the items below the URL

- select raw from the items above the text entry box

- select JSON from the dropdown to the right of type selections

- enter the JSON body you are sending in the text box.

Having done all of those steps, you should have something that looks like Figure 4.

Figure 4: Sending data to SP using the REST API and Postman is done by setting the HTTP verb to POST, filling in the URL, selecting the Body option, then the raw option and the JSON type, and filling in the JSON body. Once that is complete, press the Send button.

After sending the body with the correct settings, the results will appear in the bottom text area in Postman.

- change the HTTP verb to the left of the URL from

- Deleting data with Postman

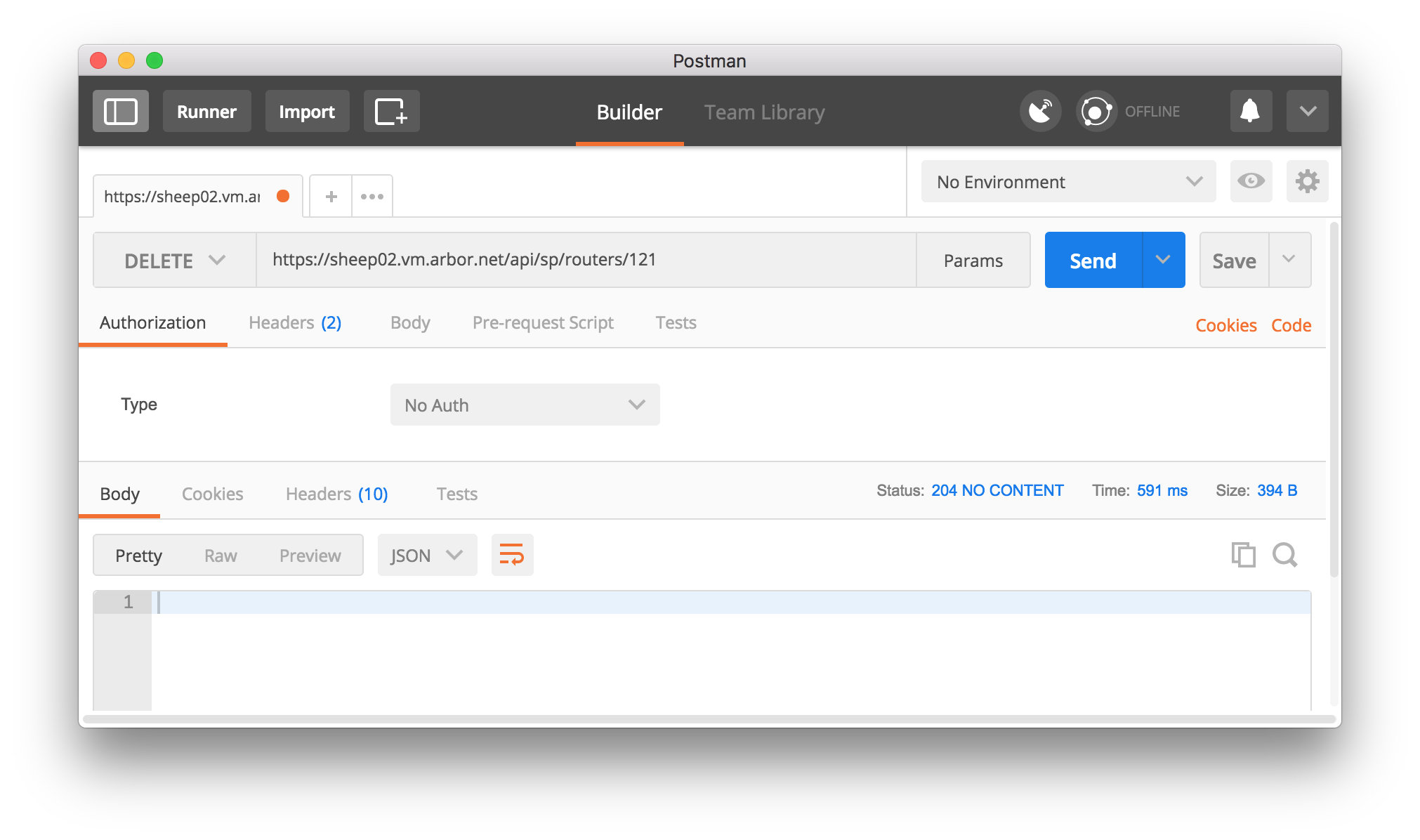

Repeating the example of deleting data from the cURL and HTTPie sections, the method for using Postman to delete data from SP via the SP REST API is to enter the URL for the object you want to delete, change the HTTP verb to Delete, and press the Send button.

The SP REST API will return the HTTP status code

204 No Contentand no body content.After a successful DELETE operation you should have something that looks like Figure 5.

Figure 5: Deleting data from SP using the REST API and Postman is done by selecting the

DELETEHTTP verb from the menu, entering the URL of the item to be deleted and pressing Send. There will be no content in returned but on the right side just above the (empty) returned data box the status will read204 NO CONTENT.

2.2.5 jq

jq is a JSON parsing and manipulation tool in the spirit of

what sed4 and awk5 are for text.

jq is free and open-source; it is available at

https://stedolan.github.io/jq/ for MacOS, Linux, and Windows or

via package managers for your platform. The C source code and

build instructions are available at https://github.com/stedolan/jq

for other platforms.

jq is very powerful, but complicated. It can be used to extract

subsets of data from JSON input, search for keys or values in JSON

data and only print the objects that match the search criteria,

select and recast data from JSON input into different JSON

output. The complete manual is available at the jq website, but

we will provide a few examples of using jq with the JSON from

the SP REST API.

- Extracting only names, descriptions, and ids of routers into a new JSON format

The HTTPie command to get the router information from SP using the REST API is:

http https://leader.example.com/api/sp/routers/

but on a system with 12 routers this results in 503 lines of JSON output. If all you need is the id, name, and description of each router, that is a lot of information to deal with.

Using

jqwe can extract the 36 pieces of data that we need.Looking at the original output from the

/routers/endpoint, we need to make note of two things: the information we want is in a list of objects in the"data"object; the fields we want are at the top level of each data object ("id") and in the"attributes"sub-object for each data object.The first thing we can do is have

jqprint each object in the"data"list by giving it the filter.data[]which means “from the top level (.) print every element[]in the"data"array”. In practice this looks like:http https://leader.example.com/api/sp/routers/ | jq '.data[]'The

jqfilter is in single-quotes to prevent the square brackets from being interpreted by the shell.jquses periods to denote the JSON hierarchy (that’s why.datastarts with a period, it is the root of the JSON tree), the pipe (|) character to chain together its filters, and commas to group elements together for selection.So the next thing we can do is extract one element from the JSON output of the SP REST API by adding it to the

.data[]filter. To get the names of the routers we can add.attributes.name(remember,"name"is in the"attributes"subobject of data. On the command line this looks like:http https://leader.example.com/api/sp/routers/ | jq '.data[].attributes.name'This will print a list of the names of all of the routers configured in SP. This is part of our original goal, but we still also want the id and description of the routers.

To select more than one element,

jqsupports sending each list item over which it is iterating to a group of elements for selection. To get the router id and description along with the name, we can type:http https://leader.example.com/api/sp/routers/ | jq '.data[] | .id,.attributes.name,.attributes.description'This will then print a list that is something like:

"121" "rtr1.nyc" "a router in New York" "122" "rtr2.chi" "a router in Chicago" "123" "rtr3.lax" "a router in Los Angeles" "124" "pigeon.net" "See RFC 1149"

where the order of the elements is the same order as they were requested in the filter. But this isn’t very easy to read, and is not very useful as input to computer programs.

jqcan do better.To make JSON-compliant output that is easy to read and easy for another computer program to ingest,

jqneeds to know how things should be grouped.http https://leader.example.com/api/sp/routers/ | jq '{"routers": [.data[] | {id:.id,name:.attributes.name,description:.attributes.description}]}'will print a list that looks something like:

{ "routers": [ { "id": "121", "name": "rtr1.nyc", "description": "a router in New York" }, { "id": "122", "name": "rtr2.chi", "description": "a router in Chicago" }, { "id": "123", "name": "rtr3.lax", "description": "a router in Los Angeles" }, { "id": "124", "name": "pigeon.net", "description": "See RFC 1149" } ] }While this example is for four routers, with the original example of 12 routers we have gone from 503 lines of JSON output to 64 lines, removing the 87% of the data we didn’t need using one filter in

jq. - Extracting alerts that have a severity percentage above 200

jqcan also look for certain properties of objects and print only the objects that have those properties.For example, a typical alert from the SP REST API looks like:

{ "data": { "attributes": { "alert_class": "dos", "alert_type": "dos_host_detection", "classification": "Possible Attack", "importance": 2, "ongoing": true, "start_time": "2017-08-01T19:44:45+00:00", "subobject": { "fast_detected": false, "host_address": "192.168.12.203", "impact_boundary": "rtr1.nyc", "impact_bps": 8251040, "impact_pps": 3010, "ip_version": 4, "misuse_types": [ "icmp", "total", "dns", "udp" ], "severity_percent": 153, "severity_threshold": 1000, "severity_unit": "bps", "summary_url": "/page?id=profile_summary&gid=154" } }, "id": "799318", "links": { "self": "https://leader.example.com/api/sp/v3/alerts/799318" }, "relationships": { "annotations": { "data": [ { "id": "1492098", "type": "alert_annotation" }, { "id": "1492097", "type": "alert_annotation" }, { "id": "1492096", "type": "alert_annotation" }, { "id": "1492095", "type": "alert_annotation" }, { "id": "1492094", "type": "alert_annotation" }, { "id": "1492093", "type": "alert_annotation" } ], "links": { "related": "https://leader.example.com/api/sp/v3/alerts/799318/annotations/" } }, "device": { "data": { "id": "115", "type": "device" }, "links": { "related": "https://leader.example.com/api/sp/v3/devices/115" } }, "managed_object": { "data": { "id": "154", "type": "managed_object" }, "links": { "related": "https://leader.example.com/api/sp/v3/managed_objects/154" } }, "packet_size_distribution": { "data": { "id": "packet-size-distribution-799318", "type": "alert_packet_size_distribution" }, "links": { "related": "https://leader.example.com/api/sp/v3/alerts/799318/packet_size_distribution" } }, "patterns": { "links": { "related": "https://leader.example.com/api/sp/v3/alerts/799318/patterns/" } }, "router_traffic": { "links": { "related": "https://leader.example.com/api/sp/v3/alerts/799318/router_traffic/" } }, "traffic": { "data": { "id": "alert-traffic-799318", "type": "alert_traffic" }, "links": { "related": "https://leader.example.com/api/sp/v3/alerts/799318/traffic" } } }, "type": "alert" }, "links": { "self": "https://leader.example.com/api/sp/v3/alerts/799318" }, "meta": { "api": "SP", "api_version": "2", "sp_build_id": "HHAB", "sp_version": "8.3" } }which is a lot of information to parse; using

jqyou can filter the data after the API returns it to you (there is some filtering available via the API that happens on the SP side before returning data to you, but it is limited; this will be discussed later).If you want only the alerts out of the most recent 50 that have severity percents (in

jqpath representation,.data[].attributes.subobject.severity_percentgreater than 200,jqcan select those alerts.The first step is the same as it was in the previous example, get each object out of the data list:

http https://leader.example.com/api/sp/alerts/ | jq '.data[]'The second step is to make the selection using

jq’s pipe functionality and put the results into a list by adding square brackets around the whole statement:http https://leader.example.com/api/sp/alerts/ | jq '[.data[] | select(.attributes.subobject.severity_percent>200)]'To verify that this is returning only alerts with a severity percent greater than 200 we can add

| .[].attributes.subobject.severity_percentto the queryhttp GET https://leader.example.com/api/sp/alerts/ | jq '[.data[] | select(.attributes.subobject.severity_percent>200)] | .[].attributes.subobject.severity_percent'301 374 262 342 374 402 342 443 373

These are only two examples of what

jqcan help you with when you are faced with a lot of JSON data, the manual and some experimentation will be very useful in usingjqin your particular environment.

2.3 The Python Environment

Most of the examples in this book will be in the Python (http://www.python.org) programming language—it is an easy-to-read language even if you aren’t familiar with it; Python is available for nearly every operating system; and it includes nearly everything you need (“The Python source distribution has long maintained the philosophy of ”batteries included“ – having a rich and versatile standard library which is immediately available, without making the user download separate packages. This gives the Python language a head start in many projects.”).

A standard Python installation includes everything you need to

access the SP REST API and process data from it—an HTTP library

(httplib) and a JSON library (json). However, the httplib

documentation (at https://docs.python.org/2/library/httplib.html)

says “The Requests package is recommended for a higher-level HTTP

client interface.”

The examples in this book will make significant use of the Requests library; you will be able to follow along with nearly all of the examples using only that additional library. If we use other libraries (for example, for accessing databases, creating web pages, etc.) we will go into more depth about those at the time.

2.3.1 The requests library

The Python Requests library documentation at https://requests.readthedocs.io/ purports:

Requests is the only Non-GMO HTTP library for Python, safe for human consumption.

and warns:

Recreational use of the Python standard library for HTTP may result in dangerous side-effects, including: security vulnerabilities, verbose code, reinventing the wheel, constantly reading documentation, depression, headaches, or even death.

Installation instructions for the requests library are at the

readthedocs site at

https://requests.readthedocs.io/en/master/user/install/, but

mostly comprise pip install requests. (And if you haven’t got

pip, see

http://docs.python-guide.org/en/latest/starting/installation/ for

installing it and a few other useful things).

Once you think you have the requests library installed, a simple

test is to follow the steps in this example (% is a Mac or Linux

shell prompt, and >>> is the interactive Python prompt):

% python

Python 2.7 (blah blah blah)

Type "help", "copyright", "credits" or "license" for more information.

>>> import requests

>>> rg = requests.get("http://www.google.com")

>>> rg

>>> <Response [200]>

>>> rg.headers

>>> {'Content-Length': '4740', 'X-XSS-Protection': '1; mode=block',

'Content-Encoding': 'gzip', 'Set-Cookie':

'NID=110=BXSpW6kxChyqif9p3Q0yFhL75QRsh0-C3vjFY_uTwpS-ANLlJsTjpC_9LypwgClOwL36COwCH6oAIBPfgcP-vZ4mwhpSqwM_UuG0pOpEGfDMDSKEtpK0mdHDaIqsEjR7;

expires=Sat, 24-Feb-2018 05:12:11 GMT; path=/;

domain=.google.co.nz; HttpOnly', 'Expires': '-1', 'Server': 'gws',

'Cache-Control': 'private, max-age=0', 'Date': 'Fri, 25 Aug 2017

05:12:11 GMT', 'P3P': 'CP="This is not a P3P policy! See

https://www.google.com/support/accounts/answer/151657?hl=en for

more info."', 'Content-Type': 'text/html; charset=ISO-8859-1',

'X-Frame-Options': 'SAMEORIGIN'}

If you get an error after you type import requests you haven’t

installed requests correctly, and may want to consult a local

expert for help. If you get a response other than <Response

[200]> after typing the requests.get line, you may not have

access to either http://www.google.com or to the Internet, both of

which would be a little odd, frankly, but that’s where you should

start looking.

2.4 Summary

At this point, you should be able to access your SP Leader’s REST

API using at least one of curl, httpie, or postman. You

might also be able to extract some sort of useful information using

the jq tool.

You should be able to make a simple HTTP request using Python and the Requests library.

The rest of this book will be examples of using these tools (and some examples in other programming languages) demonstrating how to do things with the SP REST API that will hopefully be a useful starting point for your own uses of the API.

3 Reports

The chapter describes how to produce reports from the data available from the SP REST API. This certainly isn’t an exhaustive list of reports you can produce, and the most interesting reports will be those you create by combining data from SP with data from other sources.

The difference between a report program and either configuration or

operational programs is that a report program only fetches data from

SP, it doesn’t not change the state of SP in any way (in

programmatic terms, the only HTTP verb used in these examples is

GET).

3.1 Example: Time between an Alert and a Mitigation

Arbor Networks SP can alert you to various kinds of network attacks by monitoring the network traffic across hundreds of routers and looking for anomalous behavior. In combination with Arbor Networks TMS applicances, these attacks can be mitigated by directing the odd traffic through a TMS for inspection and, if needed, cleaning.

SP and TMS support mitigating the anomalous traffic manually, where a network operator notices the alert, applies some other metrics to it, and then either decides to direct that traffic to a TMS or not. Monitoring the time between an alert and the manual mitigation can be useful. In addition, SP and TMS support automatic mitigation of traffic that generates an alert. Monitoring the time between the alert and the automatic mitigation can also be useful.

The steps to look at this are:

- establish a time period over which you want to produce this report

- collect the mitigations that were started in that time frame using the REST API

- extract from the mitigation information the related alerts

- collect the alert information about those specific alerts using the REST API

- for the group of manually started mitigations, collect the difference in start times for each mitigation

- do the same for the group of automatically started mitigations

Python supports command-line options very gracefully using the

included argparse library

(https://docs.python.org/2.7/library/argparse.html) but, for the

purpose of this example, we will simply hard-code what would

normally be options.

from __future__ import print_function import requests from datetime import datetime, timedelta from sys import stderr from time import mktime, strptime from urllib import urlencode from urlparse import urlparse, parse_qs CERT_FILE = "./certfile" TIMEFMT = "%a %b %d %H:%M:%S %Y" def get_mitigations(leader, key, start, end, page=1, perPage=50): """Recursively get pages of mitigations until the start date is reached or the last page is reached """ # set up the query string keys and values qs = dict() if page: qs['page'] = page if perPage: qs['perPage'] = perPage # build the URL MITIGATION_URI = '/api/sp/mitigations/?' URL = "https://" + leader + MITIGATION_URI URL += urlencode(qs) # make the API request api_response = requests.get( URL, headers={'X-Arbux-APIToken': key}, verify=CERT_FILE) # check the API response if api_response.status_code != requests.codes.ok: print("[ERROR] API responded with this error: " "{}\n(url: {})". format(api_response.reason, URL), file=stderr) return [] # convert the response to JSON and # get the 'data' element api_response = api_response.json() data = api_response['data'] # check if we're done or if we need # to call ourselves again specified_start = string_to_date(start) oldest_mit_on_this_page = None if 'start' in data[-1]['attributes']: oldest_mit_on_this_page = data[-1]['attributes']['start'] oldest_mit_on_this_page = string_to_date( oldest_mit_on_this_page) if (oldest_mit_on_this_page is None or oldest_mit_on_this_page > specified_start): # # parse out the last page from the links section # last_page = None if ('links' in api_response and 'last' in api_response['links']): last_page = int( parse_qs( urlparse( api_response['links']['last']).query )['page'][0] ) if last_page is not None and page < last_page: page += 1 # call ourselves again to get the next page d = get_mitigations(leader, key, start, end, page) data += d return data def get_alerts_from_mits(mits): """Not all mitigations have alerts, those will just be skipped, otherwise the alerts will be retrieved and a dictionary with mitigation information and alert start time will be populated and returned """ alerts = {} for mit in mits: if 'relationships' not in mit or 'alert' not in mit['relationships']: continue # there is no alert related to this mitigation if 'start' not in mit['attributes']: continue # mitigation was created but never started alert_id = mit[ 'relationships']['alert']['data']['id'] if alert_id not in alerts: alerts[alert_id] = {} mit_start_time = string_to_date( mit['attributes']['start']) alerts[alert_id][ 'mit_start_time'] = mit_start_time alerts[alert_id][ 'mit_type'] = mit['attributes']['subtype'] alerts[alert_id][ 'started_by'] = mit['attributes']['user'] return alerts def print_report(alerts, mitigations, sp_leader, start, end): """ print a simplistic report with a table of alerts """ print ("The time range for the report is") print (" from:", start.strftime(TIMEFMT)) print (" to:", end.strftime(TIMEFMT)) print ("Out of {} mitigations on {}, " "{} have associated alerts". format( len(mitigations), sp_leader, len(alerts.keys()) )) # re-organize alerts for printing by_user_type_time = {} for alert in alerts: started_by = alerts[alert]['started_by'] mit_type = alerts[alert]['mit_type'] a_to_m_secs = alerts[alert]['alert_to_mit_seconds'] if started_by not in by_user_type_time: by_user_type_time[started_by] = {} if mit_type not in by_user_type_time[started_by]: by_user_type_time[started_by][mit_type] = {} if a_to_m_secs not in by_user_type_time[started_by][mit_type]: by_user_type_time[started_by][mit_type][a_to_m_secs] = [] by_user_type_time[started_by][mit_type][a_to_m_secs].append(alert) # print the header row print ("{0:>20} | {1:<10} | {2:11} | {3}".format( "Mit. Started By", "Mit. Type", "Secs to Mit", "Alert Ids") ) print ("{0:>20} | {1:<10} | {2:11} | {3}".format( "-"*20, "-"*10, "-"*11, "-"*18) ) # Step through the re-organized data and print it for started_by in sorted(by_user_type_time): tmp_user = started_by for mit_type in sorted(by_user_type_time[started_by]): tmp_mit_type = mit_type for a_to_m_secs in sorted( by_user_type_time[started_by][mit_type]): print ("{0:>20} | {1:<10} | {2:11} | {3}". format( tmp_user, tmp_mit_type, a_to_m_secs, ", ".join(by_user_type_time[ started_by][ mit_type][ a_to_m_secs]))) tmp_mit_type = '' tmp_user = '' def get_alert_start_time(leader, key, alert): """Get an individual alert via the API and return its start time in python datetime format """ ALERT_URI = '/api/sp/alerts/' URL = "https://" + leader + ALERT_URI + alert api_response = requests.get( URL, headers={'X-Arbux-APIToken': key}, verify=CERT_FILE) if api_response.status_code != requests.codes.ok: print("[WARNING] In retrieving information " "about alert {}, the API responded `{}'". format(api_response.reason, alert), file=stderr) return None api_response = api_response.json() alert_start_time = string_to_date( api_response['data']['attributes']['start_time']) return alert_start_time def string_to_date(date_string): """Convert a string in the format YYYY-MM-DDThh:mm:ss to a Python datetime format """ if type(date_string) is datetime: return date_string # drop the time offset; if you are using different time zones, # don't do this date_string = date_string.split('+')[0] date_string = date_string.split('.')[0] # convert the time string into a Python datetime object date_string = strptime(date_string, "%Y-%m-%dT%H:%M:%S") date_string = datetime.fromtimestamp( mktime(date_string) ) return date_string if __name__ == '__main__': # # Set the start time to two weeks ago # and the end time to now # END_TIME = datetime.now() START_TIME = END_TIME + timedelta(-14) # # set the SP leader hostname and API key # SP_LEADER = 'leader.example.com' API_KEY = 'eFvokphdyGHA_M4oLlLtfDnlIf9bpjFnn0mWlDqw' mitigations = get_mitigations(SP_LEADER, API_KEY, START_TIME, END_TIME) if not mitigations: exit # # Create a dictionary of alert IDs that contains mitigation start # time and (later) the alert start time, the JSON of which would # look like: # { "<alert_id>": # { "mit_start_time": "<mitigation_start_time>", # "alert_start_time": "<alert_start_time>", # "mit_type": "{tms,blackhole,flowspec}" # } # } # alerts = get_alerts_from_mits(mitigations) alerts_to_remove = list() for alert in alerts: # Get the alert start time for each alert that appears in a # mitigation alerts[alert]['alert_start_time'] = get_alert_start_time( SP_LEADER, API_KEY, alert ) alert_start_time = alerts[alert]['alert_start_time'] if not alert_start_time: alerts[alert]['alert_to_mit_seconds'] = 'n/a' continue alert_start_time = string_to_date(alert_start_time) if alert_start_time < START_TIME or alert_start_time > END_TIME: alerts_to_remove.append(alert) # alerts[alert]['alert_to_mit_seconds'] = 'n/a' continue # Get the different in seconds between the # alert start and the mitigation start alerts[alert]['alert_to_mit_seconds'] = ( alerts[alert]['mit_start_time'] - alerts[alert]['alert_start_time'] ).total_seconds() # delete alerts from the list that are outside of the specific # time; this is needed because the mitigations come back in pages, # not necessarily scoped to the specified time frame for alert in alerts_to_remove: del alerts[alert] print_report(alerts, mitigations, SP_LEADER, START_TIME, END_TIME)

The time range for the report is

from: Tue Aug 29 10:45:05 2017

to: Tue Sep 12 10:45:05 2017

Out of 27 mitigations on leader.example.com, 24 have associated alerts

Mit. Started By | Mit. Type | Secs to Mit | Alert Ids

-------------------- | ---------- | ----------- | ------------------

admin | tms | 211.0 | 71

| | 213.0 | 72

| | 372.0 | 77

| | 481.0 | 65

| | 488.0 | 66

| | 497.0 | 67

| | 504.0 | 68

| | 551.0 | 1913

| | 558.0 | 1912

| | 740.0 | 75

| | 744.0 | 388

| | 819.0 | 387

| | 843.0 | 1910

| | 1255.0 | 1909

| | 1338.0 | 38

| | 1354.0 | 37

| | 1363.0 | 36

| | 1373.0 | 35

| | 2527.0 | 381

| | 4366.0 | 1865

| | 25051.0 | 294

| | 25058.0 | 295

| | 25065.0 | 292

| | 25072.0 | 293

3.2 Example: The Collector with the most system alerts

When operating an Arbor SP/TMS deployment, looking at system errors can helpful in diagnosing performance problems. The following Python program will gather a list of alerts in the alert class “system” and group them by appliance, then print them sorted by the number of alerts for each appliance.

"""Example SP API script to retrieve and summarize device system alerts.""" from __future__ import print_function import arrow # version: 0.10.0 import sys import re import requests # version: 2.18.4 import urllib # version: 1.17 CERT_FILE = './https_active.crt' def get_page_from_link(link): """Extract a page number from an API link.""" match = re.search(r'page=(\d+)', link) return int(match.group(1)) if match else None def api_request(url, key, body=None): """General function for making GET and POST requests. Response codes falling within 'requests.codes.ok' are assumed to have completed successfully. Supplying the optional 'body' parameter will change the request type to POST. Args: url (str): Valid URL to make request to. key (str): API key generated on the given SP leader. body (str): JSON formatted string containing post body content. Returns: dict: 'data' keyvalue of a requests.Response object. dict: 'meta' keyvalue of a requests.Response object. dict: 'links' keyvalue of a requests.Response object. """ headers = {'X-Arbux-APIToken': key, 'Content-Type': 'application/vnd.api+json'} if body is None: api_response = requests.get( url, headers=headers, verify=CERT_FILE) else: api_response = requests.post( url, data=body, headers=headers, verify=CERT_FILE) # Handle any API error responses. if (api_response.status_code != requests.codes.ok): print("API responded with this error: \n{}".format( api_response.text), file=sys.stderr) return ([], {}, {}) # Convert the response to JSON and return it api_response = api_response.json() return (api_response['data'], api_response['meta'], api_response['links']) def get_alerts(leader, key, start_time): """Retrieve alerts from an SP leader. Args: leader (str): SP leader from which the alerts originated key (str): API key generated on the given SP leader start_time (obj): arrow time object to be used a start_time filter for alerts. Returns: list: Alerts from the SP leader. """ alerts = list() # Retrieve the first page of data. (first_page, meta, links) = get_alerts_page(leader, key, start_time, 1) alerts.extend(first_page) # Retrieve first and last page numbers from the returned links. last_page_number = get_page_from_link(links["last"]) current_page_number = get_page_from_link(links["self"]) # Get all remaining pages, add the data while (current_page_number != last_page_number): current_page_number = current_page_number + 1 (current_page, meta, links) = get_alerts_page(leader, key, start_time, current_page_number) alerts.extend(current_page) return alerts def get_alerts_page(leader, key, start_time, page=1): """Retrieve a specific page of alerts from an SP leader. Args: leader (str): SP leader from which the alerts originated key (str): API key generated on the given SP leader start_time (obj): arrow time object to be used a start_time filter for alerts. page (int): The specific page of alerts to request. Returns: list: A specific page of alerts from the leader. """ # Craft the URL components. Filter on alert class and starting time. alert_uri = '/api/sp/v3/alerts/' filter_value = ("/data/attributes/alert_class = system AND " "/data/attributes/start_time > {0}".format( start_time.format('YYYY-MM-DD:HH:mm:ss'))) # Percent-encode our filter query. filter_value = urllib.quote(filter_value, safe='') # Add the parameters to the request url. params = list() filter_param = "filter={0}".format(filter_value) page_param = "page={0}".format(page) params = [filter_param, page_param] url = "https://{0}{1}?{2}".format(leader, alert_uri, "&".join(params)) # Make the api request and return its results. return api_request(url, key) def get_devices(leader, key): """Retrieve devices from an SP leader. Args: leader (str): SP leader to retrieve devices from. key (str): API key generated on the given SP leader Returns: list: Device data from the leader. """ device_uri = '/api/sp/v3/devices/' url = "https://{0}{1}".format(leader, device_uri) # Make the api request and return its results. (devices, meta, links) = api_request(url, key) return devices def main(): """Print a list of devices sorted by system alert count.""" SP_LEADER = 'leader.example.com' API_KEY = 'jrqRzJBV5Ua4t88YWuoTJ8TJsnHBe4qR1phcCvcD' # Create a start date one week before the current time. arrow_now = arrow.utcnow() start_time = arrow_now.shift(weeks=-1) # Get a list of all the system alerts starting later than # one week ago. alerts = get_alerts(SP_LEADER, API_KEY, start_time) # Get a list of all devices on the leader. devices = get_devices(SP_LEADER, API_KEY) # Transform the list into a dictionary keyed by device id. device_dict = {device["id"]: device["attributes"] for device in devices} # Create a dict keyed by device id containing a count # of system errors associated with that device. # We will use this data when displaying our list of collectors. alert_counts = {} for alert in alerts: try: device_id = alert["relationships"]["device"]["data"]["id"] except KeyError: continue alert_counts[device_id] = ( alert_counts[device_id] + 1 if device_id in alert_counts else 1) # Transform the dict into list of dicts containing id # and alert_count for each device. alert_count_list = [{"id": key, "alert_count": value} for key, value in alert_counts.iteritems()] # Sort the list in decending order by alert count. alert_count_list.sort(reverse=True, cmp=lambda x, y: cmp(x["alert_count"], y["alert_count"])) # Display a report of collectors sorted by the number of system alerts # found on each collector. header_format_string = ( "==== {alert_count:=<24} {name:=<20} " "{device_type:=<20} {ip_address:=<20}") # Display a header. print(header_format_string.format(alert_count="System Alert Count ", name="Device Name ", device_type="Device Type ", ip_address="IP Address ")) format_string = ( " {alert_count:<24} {name:20} " "{device_type:20} {ip_address:20}") # Display a row for each device with alerts. for device in alert_count_list: # Roll in our previously retrieved device data. device.update(device_dict[device["id"]]) print(format_string.format(**device)) if __name__ == "__main__": main()

The output from this is a list of SP appliances with the most system alerts, that looks something like:

==== System Alert Count ===== Device Name ======== Device Type ======== IP Address =========

79 my-leader pi 192.168.3.14

42 my-tra1 cp 192.168.3.15

19 my-tra2 cp 192.168.3.16

14 my-tms tms 192.168.3.17

7 my-backupleader pi 192.168.26.32

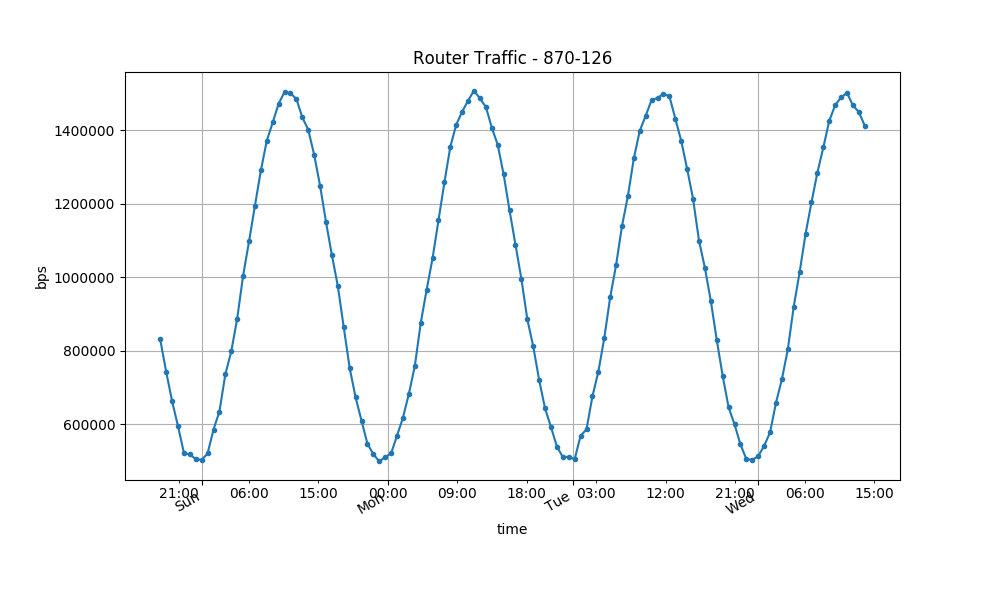

3.3 Example: Plotting Alert Data

The SOAP API can give you PNG files that you can use in your

reports. The REST API does not do that, but you can make your own

using Python and the additional library matplotlib6. This example

also introduces the arrow Python package7, which makes it easier

to deal with dates and time; it claims to offer “a sensible,

human-friendly approach to creating, manipulating, formatting and

converting dates, times, and timestamps” and, in this author’s

opinion, it delivers on that.

The steps to look at this are:

- choose an alert for which the traffic across the router is interesting

- get the

router_trafficdata from the API buy replacing theXin this URL fragment with the alert ID you are interested in:/api/sp/alerts/X/router_traffic/

- extract from the alert information the time-series data for router traffic

- turn the data into a time-series that

matplotlibcan consume and write out a file for each router involved

This Python program demonstrates reading alert data and using

matplotlib to make a plot of that data. The plot it creates

using the sample data monitored by SP is in Figure

6.

Figure 6: The SOAP API to SP can produce PNG files containing plots of alert traffic data. The REST API does not offer that exact functionality, but creating plots using Python and the matplotlib library isn’t too difficult and offers many more output format and styling options for the plots.

from __future__ import print_function from sys import stderr import requests # version 2.18.4 import arrow # version 0.10.0 import datetime import matplotlib # version 2.0.2 matplotlib.use('Agg') from matplotlib import pyplot as plt from matplotlib.dates import DayLocator, HourLocator, DateFormatter, drange ALERT_ID = 870 def render_matplotlib_png(alert_router_id, points, start, end, step): """Render a graph PNG based on timeseries data using matplotlib. Args: alert_router_id: a alert_id-router_gid string points: timeseries traffic data points start: arrow object representing the start time of the alert end: arrow object reprsenting the end time of the alert step: the time period each entry in the timeseries data spans """ # every day days = DayLocator() # every hour hours = HourLocator(interval=9) delta = datetime.timedelta(seconds=step) # calculate x axis points based on step and start time dates = drange(start, end, delta) # using AGG fig = plt.figure(figsize=(10, 6)) ax = fig.add_subplot(111) ax.plot_date(dates, points, '.-') ax.grid(True) ax.xaxis.set_major_locator(days) ax.xaxis.set_minor_locator(hours) ax.xaxis.set_major_formatter(DateFormatter('%a')) ax.xaxis.set_minor_formatter(DateFormatter('%H:%M')) ax.set_xlabel('time') ax.set_ylabel('bps') ax.set_title('Router Traffic - {}'.format(alert_router_id)) fig.autofmt_xdate() fig.savefig('{}.png'.format(alert_router_id)) def api_get_request(url, api_key): """Build an API GET request. Args: url: a valid SP box url to make the request api_key: API token used to access and use the SP API Returns: Dict value for 'data' entry of JSON response """ api_response = None api_response = requests.get( url, headers={ 'X-Arbux-APIToken': api_key, 'Content-Type': 'application/vnd.api+json' }, verify=False ) # Handle API error responses if (api_response.status_code < requests.codes.ok or api_response.status_code >= requests.codes.multiple_choices): print("API responded with this error: \n{}".format(api_response.text), file=stderr) return [] # Convert the response to JSON and return api_response = api_response.json() return api_response['data'] def get_alert_traffic_data_router(sp_leader, api_key, alert_id): """Get router interface Traffic for alert. Args: sp_leader: a valid SP box domain name api_key: API token used to access and use the SP API Returns: The API response """ alert_uri = "/api/sp/alerts/{}/router_traffic/".format(alert_id) url = "https://" + sp_leader + alert_uri # Make API request and reutrn results api_response = api_get_request(url, api_key) return api_response def graph_timeseries_data_per_router(router_traffic): """Grab appropriate data from API response then build graph. Args: router_traffic: the dict value for 'data' entry of JSON response """ if not router_traffic: return for router in router_traffic: data = router['attributes']['view']['network']['unit']['bps'] step = data['step'] # Find the end time. Create arrow datetime objects for start and end. start_time = arrow.get(data['timeseries_start']) seconds_after = (step * len(data['timeseries'])) start, end = start_time.span('second', count=seconds_after) # Build timeseries graph render_matplotlib_png(router['id'], data['timeseries'], start, end, step) return if __name__ == '__main__': sp_leader = 'leader.example.com' api_key = 'JskW5QLVUMkNn4ruVwXOM0hQdyXCtOwnpkOjOev4' print("Getting traffic data for alert {} ...".format(ALERT_ID)) # get router traffic timeseries router_traffic = get_alert_traffic_data_router(sp_leader, api_key, ALERT_ID) print("Rendering graph PNGs...") graph_timeseries_data_per_router(router_traffic) print("Done.")

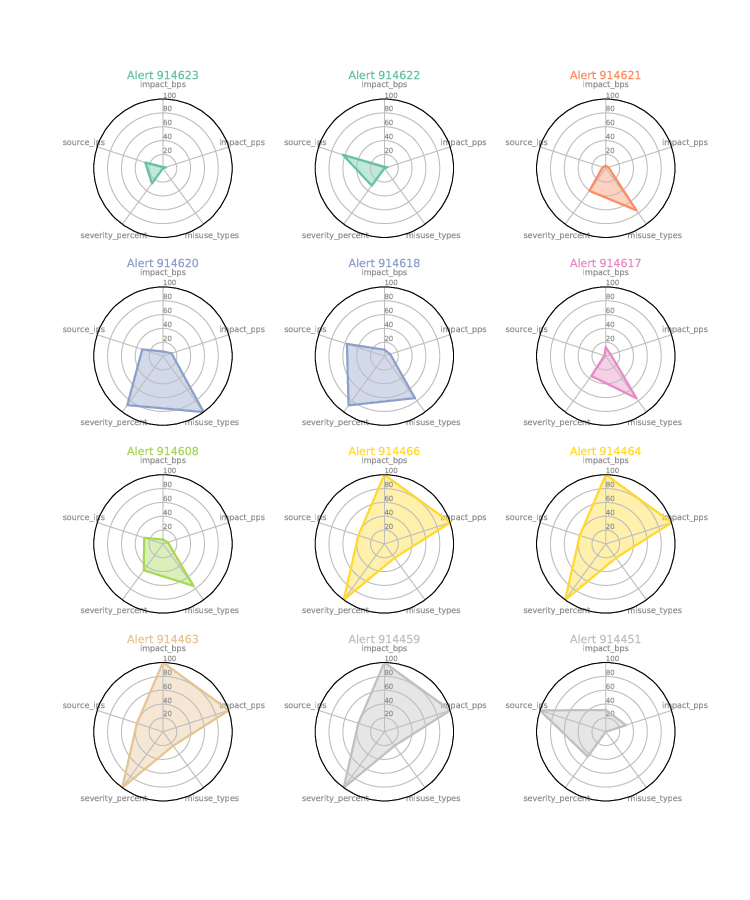

3.4 Example: Using Alert Details to create radar plots of alert characteristics

As of SP8.4 APIv4 the /alerts/ endpoint has the option of

including all of the information that SP collects about alerts. If

you are familiar with the SP UI, this is the data that is used to

create the graphs and tables shown on the alert summary, traffic

details, routers, and annotation tabs of the alert page.

The /alerts/ endpoint includes relationships to information about

the alert; the "relationships" object in the alert includes:

{

"relationships": {

"packet_size_distribution": {

"data": {

"id": "packet-size-distribution-<alert_id>",

"type": "alert_packet_size_distribution"

},

"links": {

"related": "https://spleader.example.com/api/sp/v4/alerts/<alert_id>/packet_size_distribution"

}

},

"patterns": {

"links": {

"related": "https://spleader.example.com/api/sp/v4/alerts/<alert_id>/patterns/"

}

},

"router_traffic": {

"links": {

"related": "https://spleader.example.com/api/sp/v4/alerts/<alert_id>/router_traffic/"

}

},

"source_ip_addresses": {

"data": {

"id": "source-ip-addresses-<alert_id>",

"type": "alert_source_ip_addresses"

},

"links": {

"related": "https://spleader.example.com/api/sp/v4/alerts/<alert_id>/source_ip_addresses"

}

},

"thresholds": {

"links": {

"related": "https://spleader.example.com/api/sp/v4/alerts/<alert_id>/misuse_types_thresholds/"

}

},

"traffic": {

"data": {

"id": "alert-traffic-<alert_id>",

"type": "alert_traffic"

},

"links": {

"related": "https://spleader.example.com/api/sp/v4/alerts/<alert_id>/traffic"

}

}

}

}

each of those relationships can be referenced in turn but, to

minimize the number of HTTP requests required, the /alerts/

endpoint (among other endpoints; see the online API documentation)

also supports the include URL query parameter to include related

data in the first request to the API. To use the include URL

query parameter append ?include=rel_1,rel_2,rel_3 to the API URL,

where each rel_N parameter is the name of the relationship (for

example, source_ip_addresses, thresholds, and traffic). The

results of that API request will include a new list called

included that is objects that comprise the attributes and

relationships objects for each included element. This list has a

format similar to:

{

"included": [

{

"relationships": {

"router": {

"data": {

"type": "router",

"id": "<router_id>"

},

"links": {

"related": "<link_to_router>"

}

},

"parent": {

"data": {

"type": "alert",

"id": "<alert_id>"

},

"links": {

"related": "<alert_id_url>"

}

}

},

"attributes": {

"content": "<alert_pattern_data>"

},

"type": "alert_patterns",

"id": "<alert_id>-router:<router_id+cidr_port",

"links": {

"self": "<self_link>"

}

},

{

"router": {

"data": {

"type": "router",

"id": "<router_id>"

},

"links": {

"related": "<link_to_router>"

}

},

"parent": {

"data": {

"type": "alert",

"id": "<alert_id>"

},

"links": {

"related": "<alert_id_url>"

}

}

"type": "alert_patterns",

"id": "<alert_id>-router:<router_id+cidr_port",

"links": {

"self": "<self_link>"

}

}

]

}

For the alert endpoint, this additional data available via the API can allow for different representations of alerts, and this example gathers some of this data and creates radar or spider plots out of that data, displaying 12 alerts on one page for quick visual analysis of them. It is easy to pick out the alerts that are similar to each other, or those that are unique among the 12.

Figure 7: An example of using radar plots for comparing characteristics of alerts; the alert characteristics selected for this display are impact_bps, impact_pps, severity_percent, misuse_types, and source_ips. The program that makes these can be easily modified to show other alert details in this format.

The Python program to make these plots makes use of a few extra Python packages:

- matplotlib.pyplot

- pandas

both of these can be install using easy_install or pip. For

more information, they each have their own websites:

#!/usr/bin/env python """An example program that collects data on alerts and plots radar (or spider) plots with that data This makes use of the expanded alert data that was added in SP 8.4 APIv4 """ from __future__ import print_function import requests import sys import matplotlib.pyplot as plt import pandas as pd from math import pi, ceil LEADER = 'spleader.example.com' APITOKEN = 'mYsEcReTkEy007' PER_PAGE = 12 ONGOING = True INCLUDE = ['source_ip_addresses'] FILTER = 'filter=/data/attributes/alert_class=dos' RADAR_FIELDS = ['impact_bps', 'impact_pps', 'severity_percent', 'misuse_types', 'source_ips'] def get_alerts(leader, apitoken, per_page, ongoing, include): """Return alerts and included data Fetch `per_page` alerts via the API provided by `leader` using `apitoken`. If `ongoing` is true, only get ongoing alerts. If there are elements in the `include` list, include those in the output making use of the `include` URL parameter to retrieve that data in one HTTP GET request """ url = 'https://{}/api/sp/alerts/?perPage={}&{}'.format( leader, per_page, FILTER ) if ONGOING: url += 'AND /data/attributes/ongoing=True' if include: url += '&include={}'.format(",".join(include)) results = requests.get( url, headers={ "X-Arbux-APIToken": apitoken, "Content-Type": "application/vnd.api+json" }, verify="./certfile" ) if results.status_code != requests.codes.ok: print ("Results: {} ({})".format( results.reason, results.status_code), file=sys.stderr) return None return (results.json()) def make_included_dictionary(alerts): """reorganize the data included with the alert extract the data from included into a dict of dicts that looks like: alert_1: included_field_1: list included_field_2: list alert_2: included_field_1: list included_field_2: list this is one recommended way of using `include`d data: put it into a dictionary by alert ID so later it can be easily related to the alert """ included = dict() for alert in alerts['included']: id = alert['relationships']['parent']['data']['id'] included[id] = dict() for type in alert['attributes']: included[id][type] = alert['attributes'][type] return included def make_spiders(df): """Take a dataframe, produce a file called plot.pdf Use the data in the dataframe and turn it into an array of radar plots and write it to a file; with much thanks to https://python-graph-gallery.com/392-use-faceting-for-radar-chart/ """ max_cols = 3 my_dpi = 96 plt.figure(figsize=(1000/my_dpi, 1200/my_dpi), dpi=my_dpi) plt.subplots_adjust(left=0.125, bottom=0.1, right=0.9, top=0.9, wspace=0.6, hspace=0.2) my_palette = plt.cm.get_cmap("Set2", len(list(df))) # number of variable categories = df.index N = len(categories) rows = ceil(float(len(list(df))) / max_cols) # print ("N: {}; rows: {}".format(N, rows)) # What will be the angle of each axis in the plot? (we divide the # plot / number of variable) angles = [n / float(N) * 2 * pi for n in range(N)] angles += angles[:1] # loop starts here, probably row = 0 for axkey in [str(m) for m in sorted([int(n) for n in df.keys()], reverse=True)]: # print ("{}: {}".format( # axkey, df[axkey].values.flatten().tolist())) # Initialise the spider plot ax = plt.subplot(rows, max_cols, row+1, polar=True, ) # If you want the first axis to be on top: ax.set_theta_offset(pi / 2) ax.set_theta_direction(-1) # Draw one axe per variable + add labels labels yet plt.xticks(angles[:-1], categories, color='grey', size=8) # Draw ylabels ax.set_rlabel_position(0) plt.yticks([20, 40, 60, 80, 100], ["20", "40", "60", "80", "100"], color="grey", size=7) plt.ylim(0, 100) # Ind1 # values = df.loc[row].drop('group').values.flatten().tolist() values = df[axkey].values.flatten().tolist() values += values[:1] ax.plot(angles, values, color=my_palette(row), linewidth=2, linestyle='solid') ax.fill(angles, values, color=my_palette(row), alpha=0.4) # Add a title title = "Alert {}".format(axkey) plt.title(title, size=11, color=my_palette(row), y=1.1) row += 1 plt.savefig('radar.pdf') def get_radar_fractions(data): """ normalize data across the alert properties """ maxval = dict() for alert_id in data: for key in data[alert_id].keys(): if key not in maxval or data[alert_id][key] > maxval[key]: maxval[key] = data[alert_id][key] frac_data = dict() for alert_id in data: frac_data[alert_id] = dict() for key in data[alert_id].keys(): frac_data[alert_id][key] = (float(data[alert_id][key]) / maxval[key]) * 100. return frac_data def get_radar_data(alerts, fields): """given a list of alerts from the API and a list of fields extract the data from each field of each alert and put it into a dictionary with two sub-keys for each field: one with the raw value and one with the value relative to all of the others in the list """ data = dict() included = make_included_dictionary(alerts) for alert in alerts['data']: alert_id = alert['id'] data[alert_id] = dict() for field in fields: if field in alert['attributes']: data[alert_id][field] = alert['attributes'][field] elif field in alert['attributes']['subobject']: data[alert_id][field] = alert['attributes']['subobject'][field] elif alert_id in included and field in included[alert_id]: data[alert_id][field] = included[alert_id][field] else: data[alert_id][field] = 0 # for alert attributes that are not values but are lists of things, # report the length of the list if type(data[alert_id][field]) is list: data[alert_id][field] = len(data[alert_id][field]) return data if __name__ == '__main__': print ("Retrieving alert data", file=sys.stderr) alerts = get_alerts( LEADER, APITOKEN, PER_PAGE, ONGOING, INCLUDE) if not alerts: print ("Did not retrieve any valid DoS " "alerts from most recent {} alerts".format(PER_PAGE), file=sys.stderr) sys.exit(0) print ("Processing radar data for {} alerts".format( len(alerts['data'])), file=sys.stderr) radar_data = get_radar_data(alerts, RADAR_FIELDS) radar_fractions = get_radar_fractions(radar_data) df = pd.DataFrame(radar_fractions) print ("Plotting radar data", file=sys.stderr) make_spiders(df) print ("Done")

3.5 Example: Attacks Grouped by CIDR Block

Arbor Networks SP accumulates and stores a lot of data about network traffic and distributed denial of service (DDoS) attacks and presents this data in ways that have proven useful to network engineers and network security analysts. That data can be used in many other ways by extracting it via the REST API and applying information specific to your needs.

This example simply takes IP addresses from the alert data,

aggregates them into CIDR blocks of a configurable size, and prints

a simple report. It uses the ipaddress8 Python library and

is written for Python2; ipaddress is included with Python3, so

this program is easily adapted for Python3.

When run, this program recursively calls its function

get_attacked_addresses to step through the pages of data from the

/alerts/ endpoint until the last item in the list of alerts on

the current page is outside of the specified date rang, then puts

those addresses into IPv4 or IPv6 CIDR blocks and counts them,

finally printing a simple report. All of that looks like this:

paging to page 2; # addresses so far: 0

paging to page 3; # addresses so far: 35

paging to page 4; # addresses so far: 72

paging to page 5; # addresses so far: 100

paging to page 6; # addresses so far: 108

paging to page 7; # addresses so far: 126

# addresses found between 2018-05-23T12:00:00+00:00 and 2018-05-23T23:00:00+00:00: 126

--------------------------+----------

Subnet | # Attacks

--------------------------+----------

10.100.2.0/24 | 1

149.39.0.0/24 | 6

149.81.12.0/24 | 11

151.107.2.0/24 | 5

216.175.102.0/24 | 18

3ffe:1:14:20::/116 | 60

70.107.137.0/24 | 10

85.94.160.0/24 | 15